核心交易分析之Datax数据导出与小结

数据导出

ads.ads_trade_order_analysis 分区表,使用DataX导出到MySQL

-

在MySQL创建对应的表

1

2

3

4

5

6

7

8

9

10

11

12

13drop table if exists dwads.ads_trade_order_analysis;

create table dwads.ads_trade_order_analysis(

areatype varchar(100), -- 区域范围:区域类型(全国、大 区、城市)

regionname varchar(100), -- 区域名称

cityname varchar(100), -- 城市名称

categorytype varchar(100), -- 商品分类类型(一级、二级)

category1 varchar(100), -- 商品一级分类名称

category2 varchar(100), -- 商品二级分类名称

totalcount BIG INT, -- 订单数量

total_productnum BIG INT, -- 商品数量

totalmoney double,

dt varchar(10)

); -

创建配置文件(json)

创建/data/script/trade/ads_trade_order_analysis.json

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96{

"job":{

"setting":{

"speed":{

"channel":1

}

},

"content":[

{

"reader":{

"name":"hdfsreader",

"parameter":{

"path":"/user/hive/warehouse/ads.db/ads_trade_order_analysis/dt=$do _date/*",

"defaultFS":"hdfs://192.168.91.121:9000",

"column":[

{

"index":0,

"type":"string"

},

{

"index":1,

"type":"string"

},

{

"index":2,

"type":"string"

},

{

"index":3,

"type":"string"

},

{

"index":4,

"type":"string"

},

{

"index":5,

"type":"string"

},

{

"index":6,

"type":"string"

},

{

"index":7,

"type":"string"

},

{

"index":8,

"type":"string"

},

{

"type":"string",

"value":"$do_date"

}

],

"fileType":"text",

"encoding":"UTF-8",

"fieldDelimiter":","

}

},

"writer":{

"name":"mysqlwriter",

"parameter":{

"writeMode":"insert",

"username":"hive",

"password":"12345678",

"column":[

"areatype",

"regionname",

"cityname",

"categorytype",

"category1",

"category2",

"totalcount",

"total_productnum",

totalmoney",

"dt"

],

"preSql":[

"delete from ads_trade_order_analysis where dt='$do_date'"

],

"connection":[

{

"jdbcUrl":"jdbc:mysql://192.168.91.123:3306/dwads?useUnicode=true&characterEncoding=utf-8",

"table":[

"ads_trade_order_analysis"

]

}

]

}

}

}

]

}

} -

执行命令,使用json配置文件;测试

1

python /data/modules/datax/bin/datax.py -p "-Ddo_date=2020-07-12" /data/script/trade/ads_trade_order_analysis.json

-

编写执行脚本(shell)

创建/data/script/trade/ads_trade_order_analysis.sh

1

2

3

4

5

6

7

8

9

10

11

12#!/bin/bash

source /etc/profile

JSON=/data/script

if [ -n "$1" ]

then

do_date=$1

else

do_date=`date -d "-1 day" +%F`

fi

python $DATAX_HOME/bin/datax.py -p "-Ddo_date=$do_date" $JSON/trade/ads_trade_order_analysis.json -

shell脚本的测试

1

sh /data/script/trade/ads_trade_order_analysis.sh 2020-07-12

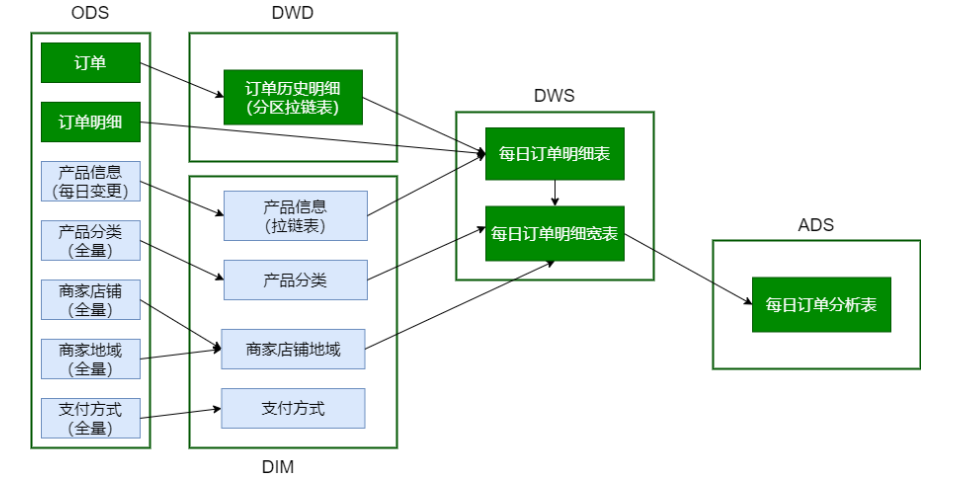

小结

脚本调用次序:

1 | # 加载ODS数据(含DataX迁移数据) |

主要技术点:

-

拉链表。创建、使用与回滚;商品信息表、订单表(周期性事实表;分区表+拉链表)

-

宽表(逆规范化):商品分类表、商品地域组织表、订单明细及订单明细宽表(轻度汇总的事实表)

本博客所有文章除特别声明外,均采用 CC BY-NC-SA 4.0 许可协议。转载请注明来自 WeiJia_Rao!