Spark开发环境搭建IDEA

前提:安装scala插件;能读写HDFS文件

-

- 创建maven项目,修改java目录为scala,添加项目框架scala

-

- pom.xml添加依赖

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101<properties>

<maven.compiler.source>8</maven.compiler.source>

<maven.compiler.target>8</maven.compiler.target>

<scala.version>2.12.10</scala.version>

<spark.version>2.4.5</spark.version>

<hadoop.version>2.9.2</hadoop.version>

<encoding>UTF-8</encoding>

</properties>

<dependencies>

<dependency>

<groupId>org.scala-lang</groupId>

<artifactId>scala-library</artifactId>

<version>${scala.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.12</artifactId>

<version>${spark.version}</version>

</dependency>

</dependencies>

<build>

<pluginManagement>

<plugins>

<!-- 编译scala的插件 -->

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<version>3.2.2</version>

</plugin>

<!-- 编译java的插件 -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.5.1</version>

</plugin>

</plugins>

</pluginManagement>

<plugins>

<plugin>

<groupId>net.alchim31.maven</groupId>

<artifactId>scala-maven-plugin</artifactId>

<executions>

<execution>

<id>scala-compile-first</id>

<phase>process-resources</phase>

<goals>

<goal>add-source</goal>

<goal>compile</goal>

</goals>

</execution>

<execution>

<id>scala-test-compile</id>

<phase>process-test-resources</phase>

<goals>

<goal>testCompile</goal>

</goals>

</execution>

</executions>

</plugin>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<executions>

<execution>

<phase>compile</phase>

<goals>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

<!-- 打jar插件 -->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>2.4.3</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<filters>

<filter>

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build> -

- 在resource目录下创建core-site.xml,内容为虚拟机环境上Hadoop的配置文件core-site.xml

-

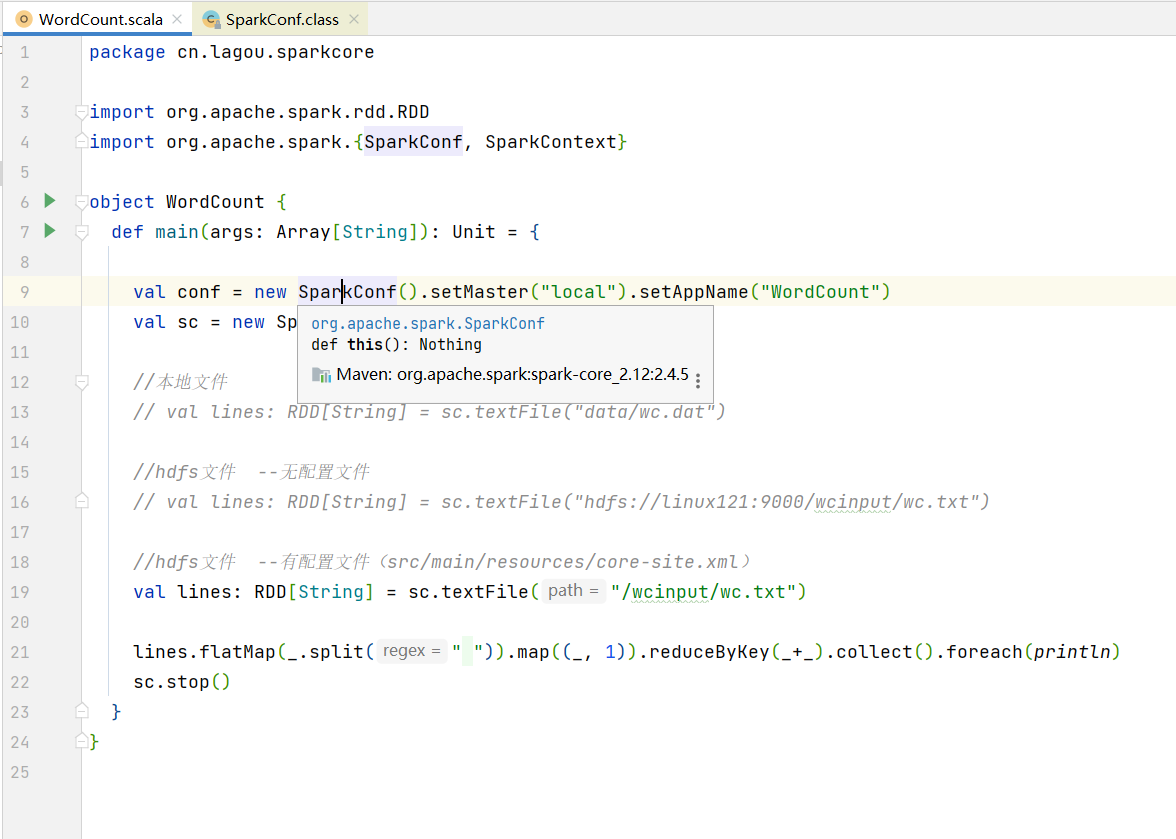

- 创建scal对象WordCount

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24package cn.lagou.sparkcore

import org.apache.spark.rdd.RDD

import org.apache.spark.{SparkConf, SparkContext}

object WordCount {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setMaster("local").setAppName("WordCount")

val sc = new SparkContext(conf)

//本地文件

// val lines: RDD[String] = sc.textFile("data/wc.dat")

//hdfs文件 --无配置文件

// val lines: RDD[String] = sc.textFile("hdfs://linux121:9000/wcinput/wc.txt")

//hdfs文件 --有配置文件(src/main/resources/core-site.xml)

val lines: RDD[String] = sc.textFile("/wcinput/wc.txt")

lines.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_+_).collect().foreach(println)

sc.stop()

}

} -

- 链接源码

本博客所有文章除特别声明外,均采用 CC BY-NC-SA 4.0 许可协议。转载请注明来自 WeiJia_Rao!