After Impala is installed via Yum, impala-shell is available globally (it can be run from any directory).

Example

1. Enter the impala-shell command line

Running impala-shell opens Impala's interactive shell:

```
[root@linux123 conf]# impala-shell
Connected to linux123:21000
Server version: impalad version 2.3.0-cdh5.5.0 RELEASE (build 0c891d79aa38f297d244855a32f1e17280e2129b)
***********************************************************************************
Welcome to the Impala shell. Copyright (c) 2015 Cloudera, Inc. All rights reserved.
(Impala Shell v2.3.0-cdh5.5.0 (0c891d7) built on Mon Nov 9 12:18:12 PST 2015)

The SET command shows the current value of all shell and query options.
***********************************************************************************
[linux123:21000] >
```

2. List all databases

```
[linux123:21000] > show databases;
Query: show databases
+------------------+
| name             |
+------------------+
| _impala_builtins |
| default          |
|                  |
+------------------+
```

To use Impala, we first need to load data into it. How do we do that?

3. Load data

Create a user.csv file with the following content:

```shell
cd /root/hadoop/data/
vim user.csv
```

```
392456197008193000,张三,20,0
267456198006210000,李四,25,1
892456199007203000,王五,24,1
492456198712198000,赵六,26,2
392456197008193000,张三,20,0
392456197008193000,张三,20,0
```
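As an alternative to editing the file in vim, the same six rows can be written with a shell here-document, followed by a quick check that every row splits into exactly four comma-separated fields. Four fields is what the table definitions below expect (id, name, age, gender with fields terminated by ','). This is a minimal sketch that assumes it is run from the data directory (/root/hadoop/data/ above) in a POSIX shell with awk available.

```shell
# Create user.csv without opening an editor (alternative to the vim step).
# Assumption: run from the data directory, e.g. /root/hadoop/data/.
cat > user.csv <<'EOF'
392456197008193000,张三,20,0
267456198006210000,李四,25,1
892456199007203000,王五,24,1
492456198712198000,赵六,26,2
392456197008193000,张三,20,0
392456197008193000,张三,20,0
EOF

# Each row must split into exactly 4 fields on ',' to line up with the
# id, name, age and gender columns; print any row that does not.
awk -F',' 'NF != 4 { print "bad row " NR ": " $0; bad = 1 } END { exit bad }' user.csv \
  && echo "user.csv OK: $(awk 'END { print NR }' user.csv) rows"
```

If a row ever has a stray comma (for example inside a name), the check prints it before the file is uploaded, instead of Impala silently shifting values into the wrong columns.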

```shell
hadoop fs -mkdir -p /user/impala/t1

# Upload the local user.csv to /user/impala/t1 on HDFS
hadoop fs -put user.csv /user/impala/t1
```

```shell
# Enter impala-shell
impala-shell
```

```sql
-- Drop the table if it already exists
drop table if exists t1;
-- Create the external table
create external table t1(
  id string,
  name string,
  age int,
  gender int
)
row format delimited fields terminated by ','
location '/user/impala/t1';
```

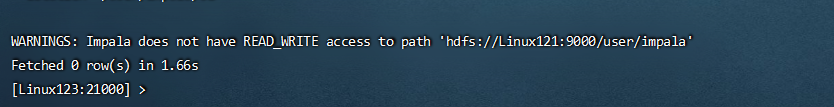

Impala accesses HDFS as the impala user, so to avoid permission problems we can turn off HDFS permission checking.

Add the following property to hdfs-site.xml on linux121, then restart HDFS so that the change takes effect:

```xml
<!-- Disable HDFS permission checking -->
<property>
  <name>dfs.permissions.enabled</name>
  <value>false</value>
</property>
```

If the error above still occurs, open up the permissions on the HDFS directory and then refresh the metadata from the impala-shell window:

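```shell
# -R also applies the permissions to the table directory /user/impala/t1 and its files
hdfs dfs -chmod -R 777 /user/impala
```

```sql
-- Run inside impala-shell to reload the metadata
invalidate metadata;
```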

```
[linux122:21000] > select * from t1;
Query: select * from t1
+--------------------+------+-----+--------+
| id                 | name | age | gender |
+--------------------+------+-----+--------+
| 392456197008193000 | 张三 | 20  | 0      |
| 267456198006210000 | 李四 | 25  | 1      |
| 892456199007203000 | 王五 | 24  | 1      |
| 492456198712198000 | 赵六 | 26  | 2      |
| 392456197008193000 | 张三 | 20  | 0      |
| 392456197008193000 | 张三 | 20  | 0      |
+--------------------+------+-----+--------+
```

```sql
-- Create an internal (managed) table
create table t2(
  id string,
  name string,
  age int,
  gender int
)
row format delimited fields terminated by ',';
-- View the table structure
desc t1;
desc formatted t2;
```

```sql
insert overwrite table t2 select * from t1 where gender = 0;
```

Verify the data:

```
[linux122:21000] > select * from t2;
Query: select * from t2
+--------------------+------+-----+--------+
| id                 | name | age | gender |
+--------------------+------+-----+--------+
| 392456197008193000 | 张三 | 20  | 0      |
| 392456197008193000 | 张三 | 20  | 0      |
| 392456197008193000 | 张三 | 20  | 0      |
+--------------------+------+-----+--------+
```
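To see what the where gender = 0 predicate of the INSERT OVERWRITE keeps, the same filter can be applied locally to the rows of user.csv with awk. This is only an offline sanity check, assuming t1 contains exactly the rows of user.csv; it needs a POSIX shell and awk, not Hadoop or Impala.

```shell
# Offline cross-check: apply the same "gender = 0" predicate to the rows
# of user.csv (assumed to be exactly what t1 contains).
rows='392456197008193000,张三,20,0
267456198006210000,李四,25,1
892456199007203000,王五,24,1
492456198712198000,赵六,26,2
392456197008193000,张三,20,0
392456197008193000,张三,20,0'

# Column 4 is gender (id, name, age, gender), so keep rows where it equals 0
printf '%s\n' "$rows" | awk -F',' '$4 == 0'

# Count the matching rows; this is how many rows t2 should end up with
printf '%s\n' "$rows" | awk -F',' '$4 == 0 { n++ } END { print n + 0 }'
```

The count should match the three rows returned by select * from t2. Because INSERT OVERWRITE replaces the table's existing contents, rerunning the statement should still leave t2 with the same three rows, whereas INSERT INTO would append another three.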

4. Update metadata

Connect to Hive and query its data: the tables created through Impala, and the data loaded into them, are all visible to Hive.

```
show tables;
+-----------+
| tab_name  |
+-----------+
| t1        |
| t2        |
+-----------+

select * from t1;
+---------------------+----------+---------+------------+
| t1.id               | t1.name  | t1.age  | t1.gender  |
+---------------------+----------+---------+------------+
| 392456197008193000  | 张三       | 20      | 0          |
| 267456198006210000  | 李四       | 25      | 1          |
| 892456199007203000  | 王五       | 24      | 1          |
| 492456198712198000  | 赵六       | 26      | 2          |
| 392456197008193000  | 张三       | 20      | 0          |
| 392456197008193000  | 张三       | 20      | 0          |
+---------------------+----------+---------+------------+
```

5. Summary

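In the example above, the data file we prepared for Impala was a comma-separated text file. In practice, Impala supports many other file formats as well, such as RCFile, SequenceFile, and Parquet.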
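As an illustration of the Parquet support mentioned above, a text table such as t1 can be copied into a new Parquet table with a CREATE TABLE ... STORED AS PARQUET AS SELECT (CTAS) statement. The sketch below wraps that statement in a small shell function that runs it through impala-shell -q, the non-interactive mode that executes one statement and exits. The function name to_parquet, the target table name t1_parquet, and the IMPALA_SHELL override variable are hypothetical, chosen only for this example.

```shell
# Hypothetical helper: copy an existing table into a new Parquet-backed table
# using CTAS (CREATE TABLE ... STORED AS PARQUET AS SELECT).
# $1 = source table, $2 = name of the new table (must not exist yet).
# IMPALA_SHELL (hypothetical) may point at another impala-shell binary;
# it defaults to the impala-shell found on PATH.
to_parquet() {
  if [ "$#" -ne 2 ]; then
    echo "usage: to_parquet SOURCE_TABLE NEW_TABLE" >&2
    return 2
  fi
  "${IMPALA_SHELL:-impala-shell}" -q "create table $2 stored as parquet as select * from $1"
}

# Example (on a node where impala-shell can reach an impalad):
#   to_parquet t1 t1_parquet
```

Keeping the query text in one place and the binary overridable makes it easy to dry-run the function (for example with IMPALA_SHELL=echo) to inspect the exact statement before sending it to the cluster.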

How does Impala's metadata relate to Hive's?

Command for Impala to sync Hive metadata: run invalidate metadata manually (covered in detail later).

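Impala accesses and operates on Hive's metadata through Hive's metastore service. However, when Hive creates, drops, or alters tables, Impala cannot detect these metadata changes automatically. To make Impala aware of changes to Hive's metadata, the first thing to do after entering impala-shell is to run invalidate metadata. This command invalidates all of Impala's cached metadata and re-synchronizes it from the metastore. The metadata update commands are covered in detail later.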
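Because invalidate metadata is something to run routinely after Hive-side changes, it can also be issued from a script with impala-shell -q instead of typing it in the interactive shell. INVALIDATE METADATA also accepts a table name, which limits the invalidation to that one table; the global form is the more expensive of the two. The function name refresh_impala_metadata and the IMPALA_SHELL override variable below are hypothetical; this is a minimal sketch, not a prescribed workflow.

```shell
# Hypothetical helper: run INVALIDATE METADATA non-interactively via impala-shell -q,
# e.g. right after tables were created or altered in Hive.
# $1 (optional) = table name; without it, all cached metadata is invalidated.
# IMPALA_SHELL (hypothetical) defaults to the impala-shell found on PATH.
refresh_impala_metadata() {
  if [ -n "${1:-}" ]; then
    "${IMPALA_SHELL:-impala-shell}" -q "invalidate metadata $1"
  else
    "${IMPALA_SHELL:-impala-shell}" -q "invalidate metadata"
  fi
}

# Examples:
#   refresh_impala_metadata t1   # only table t1
#   refresh_impala_metadata      # all tables
```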