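HDFS Shell Client Operations

Operating HDFS from the Shell Command Line

Basic syntax

hdfs dfs <command> OR bin/hdfs dfs <command>

Use the first form when the Hadoop bin directory is on PATH, and the second when running from the Hadoop installation directory.

Full command list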

[root@linux121 hadoop-2.9.2]# bin/hdfs dfs

Usage: hadoop fs [generic options]

[-appendToFile <localsrc> ... <dst>]

[-cat [-ignoreCrc] <src> ...]

[-checksum <src> ...]

[-chgrp [-R] GROUP PATH...]

[-chmod [-R] <MODE[,MODE]... | OCTALMODE> PATH...]

[-chown [-R] [OWNER][:[GROUP]] PATH...]

[-copyFromLocal [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-copyToLocal [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-count [-q] [-h] [-v] [-t [<storage type>]] [-u] [-x] <path> ...]

[-cp [-f] [-p | -p[topax]] [-d] <src> ... <dst>]

[-createSnapshot <snapshotDir> [<snapshotName>]]

[-deleteSnapshot <snapshotDir> <snapshotName>]

[-df [-h] [<path> ...]]

[-du [-s] [-h] [-x] <path> ...]

[-expunge]

[-find <path> ... <expression> ...]

[-get [-f] [-p] [-ignoreCrc] [-crc] <src> ... <localdst>]

[-getfacl [-R] <path>]

[-getfattr [-R] {-n name | -d} [-e en] <path>]

[-getmerge [-nl] [-skip-empty-file] <src> <localdst>]

[-help [cmd ...]]

[-ls [-C] [-d] [-h] [-q] [-R] [-t] [-S] [-r] [-u] [<path> ...]]

[-mkdir [-p] <path> ...]

[-moveFromLocal <localsrc> ... <dst>]

[-moveToLocal <src> <localdst>]

[-mv <src> ... <dst>]

[-put [-f] [-p] [-l] [-d] <localsrc> ... <dst>]

[-renameSnapshot <snapshotDir> <oldName> <newName>]

[-rm [-f] [-r|-R] [-skipTrash] [-safely] <src> ...]

[-rmdir [--ignore-fail-on-non-empty] <dir> ...]

[-setfacl [-R] [{-b|-k} {-m|-x <acl_spec>} <path>]|[--set <acl_spec> <path>]]

[-setfattr {-n name [-v value] | -x name} <path>]

[-setrep [-R] [-w] <rep> <path> ...]

[-stat [format] <path> ...]

[-tail [-f] <file>]

[-test -[defsz] <path>]

[-text [-ignoreCrc] <src> ...]

[-touchz <path> ...]

[-truncate [-w] <length> <path> ...]

[-usage [cmd ...]]

Generic options supported are:

-conf <configuration file> specify an application configuration file

-D <property=value> define a value for a given property

-fs <file:///|hdfs://namenode:port> specify default filesystem URL to use,overrides 'fs.defaultFS' property from configurations.

-jt <local|resourcemanager:port> specify a ResourceManager

-files <file1,...> specify a comma-separated list of files to be copied to the map reduce cluster

-libjars <jar1,...> specify a comma-separated list of jar files to be included in the classpath

-archives <archive1,...> specify a comma-separated list of archives to be unarchived on the compute machines

HDFS command demonstrations

Start the Hadoop cluster (so the following commands can be tested)

[root@linux121 hadoop-2.9.2]$ sbin/start-dfs.sh

[root@linux123 hadoop-2.9.2]$ sbin/start-yarn.sh

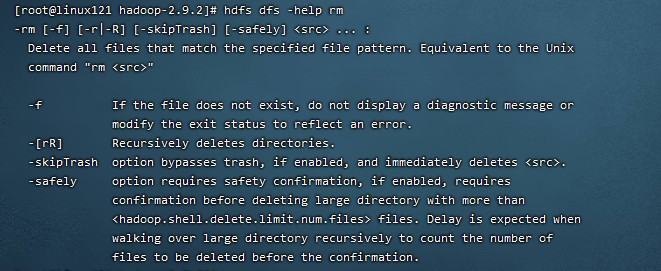

-help: print the usage and description of a command

[root@linux121 hadoop-2.9.2]$ hdfs dfs -help rm

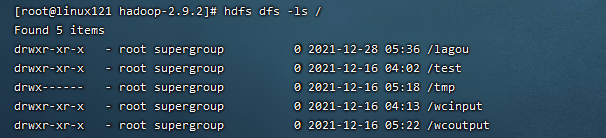

-ls: list the contents of the HDFS root directory /

[root@linux121 hadoop-2.9.2]$ hdfs dfs -ls /

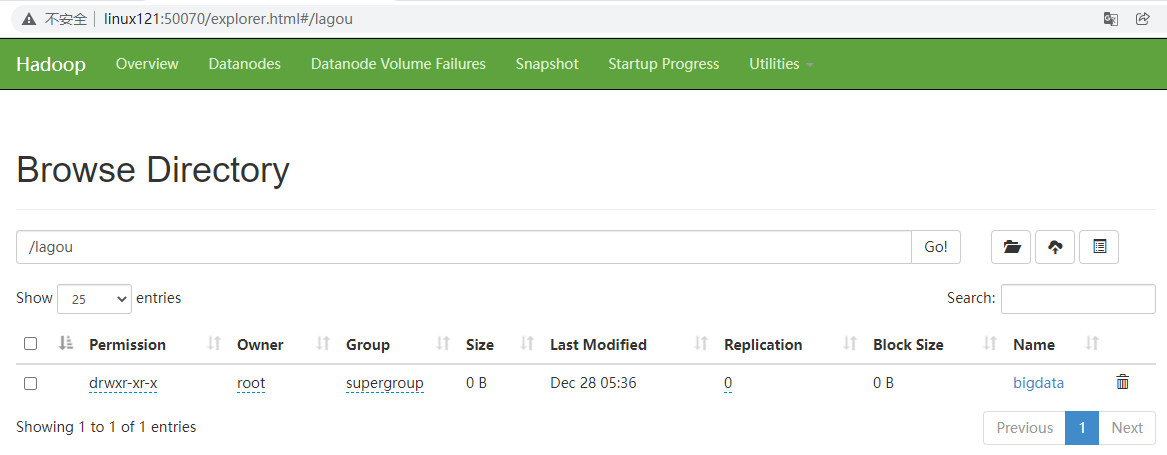
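The raw `-ls` output mixes directories and files. As a minimal sketch, the hypothetical shell function below uses `-ls -R` (from the usage list above) to print only regular-file paths under a directory. It assumes `hdfs` is on PATH, the usual `-ls` layout (permission string in the first column, path in the last), and paths without spaces.

```shell
# list_files (hypothetical helper): print the paths of regular files
# under an HDFS directory, one per line.
# Assumes the usual `hdfs dfs -ls` layout and paths without embedded spaces.
list_files() {
  local dir="$1"
  # -R recurses into subdirectories. Regular-file lines start with '-',
  # directory lines start with 'd', and a "Found N items" header is
  # skipped because its first field does not start with '-'.
  hdfs dfs -ls -R "$dir" | awk '$1 ~ /^-/ { print $NF }'
}
```

Usage: `list_files /lagou` prints one file path per line, which is convenient to pipe into other commands.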

-mkdir: create a directory on HDFS (-p also creates any missing parent directories)

[root@linux121 hadoop-2.9.2]$ hdfs dfs -mkdir -p /lagou/bigdata

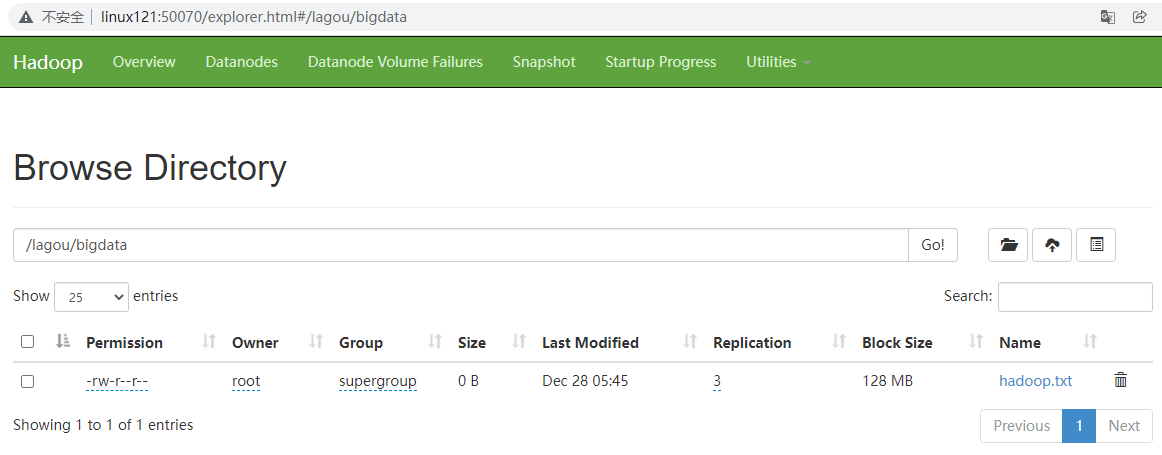

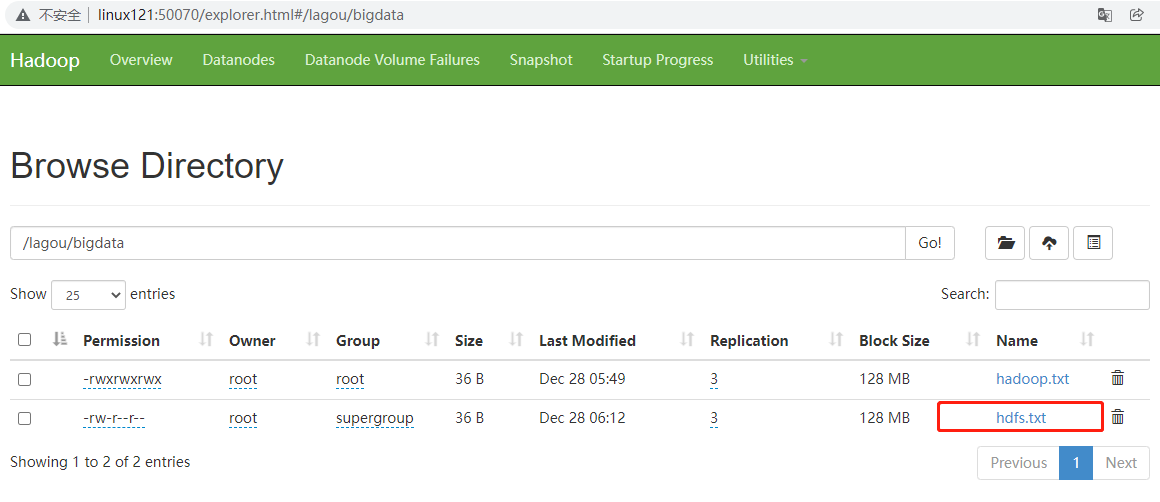
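`-mkdir` accepts several paths in one call, and with `-p` it does not fail when a directory already exists, so a setup step can safely be re-run. A minimal sketch as a hypothetical shell function, assuming `hdfs` is on PATH:

```shell
# make_dirs (hypothetical helper): create one or more HDFS directories in one call.
# -p creates missing parents and does not fail if a directory already exists.
make_dirs() {
  if [ "$#" -eq 0 ]; then
    echo "usage: make_dirs <path> [<path> ...]" >&2
    return 2
  fi
  hdfs dfs -mkdir -p "$@"
}
```

For example, `make_dirs /lagou/bigdata /user/root/test` would create both directories used in this article with a single command.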

-moveFromLocal: move (cut and paste) a local file to HDFS; the local file is removed after the upload

[root@linux121 hadoop-2.9.2]$ touch hadoop.txt

[root@linux121 hadoop-2.9.2]$ hdfs dfs -moveFromLocal ./hadoop.txt /lagou/bigdata

-appendToFile: append a local file to the end of an existing HDFS file

[root@linux121 hadoop-2.9.2]$ touch hdfs.txt

[root@linux121 hadoop-2.9.2]$ vi hdfs.txt

Enter the following content:

namenode datanode block replication

[root@linux121 hadoop-2.9.2]$ hdfs dfs -appendToFile ./hdfs.txt /lagou/bigdata/hadoop.txt

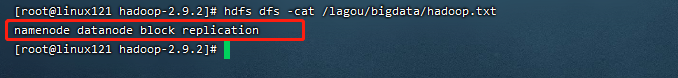
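`-appendToFile` can also read from standard input when the local source is given as `-`, which avoids creating a temporary local file such as hdfs.txt. A minimal sketch as a hypothetical shell function, assuming `hdfs` is on PATH and the destination is an existing HDFS file as in the example above:

```shell
# append_line (hypothetical helper): append one line of text to an HDFS file.
# The "-" source makes -appendToFile read the data from standard input.
append_line() {
  local dst="$1" text="$2"
  printf '%s\n' "$text" | hdfs dfs -appendToFile - "$dst"
}
```

Usage: `append_line /lagou/bigdata/hadoop.txt "namenode datanode block replication"` has the same effect as the vi-and-append steps above.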

-cat: display the contents of a file

[root@linux121 hadoop-2.9.2]$ hdfs dfs -cat /lagou/bigdata/hadoop.txt

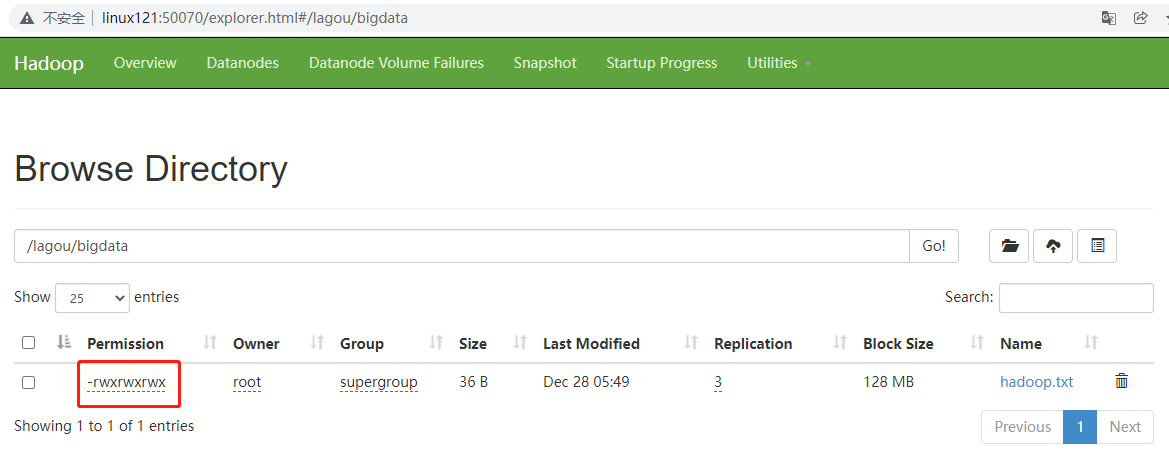

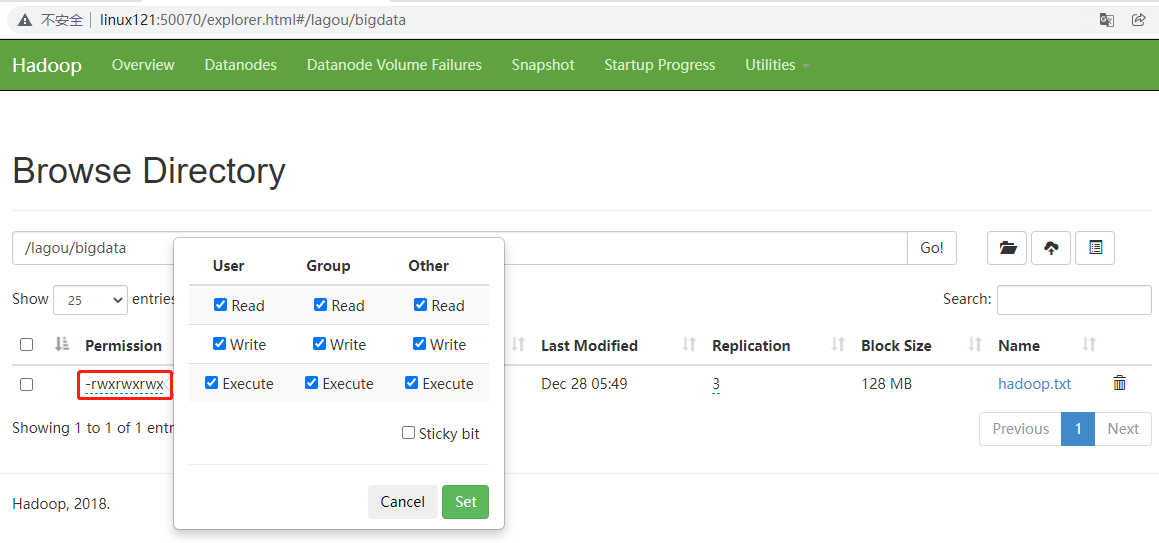

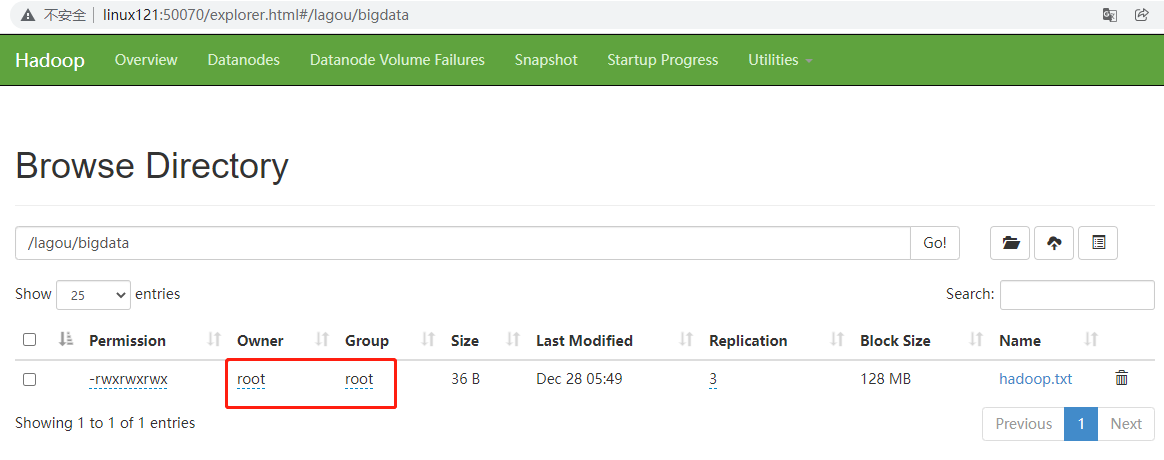

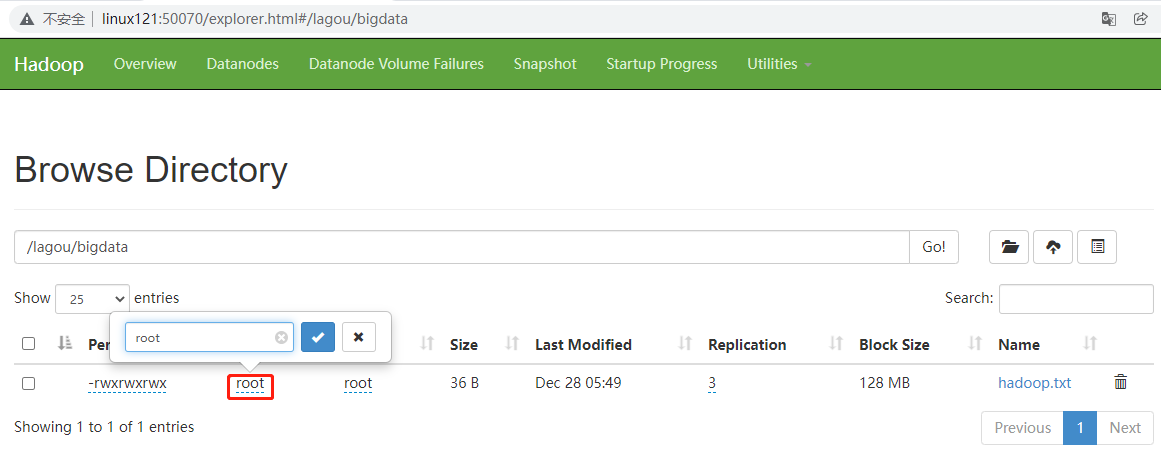

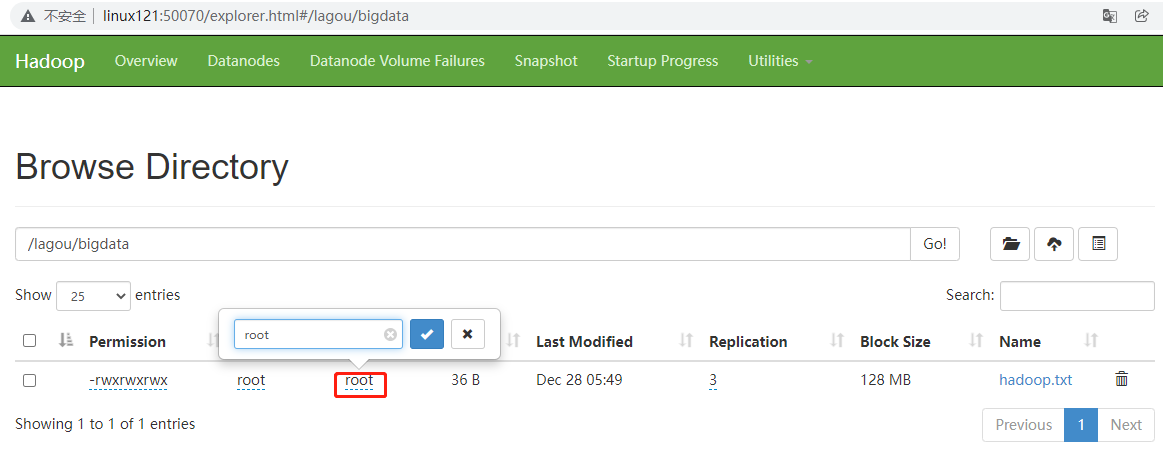
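Because `-cat` streams the file to standard output, ordinary Unix tools can post-process HDFS data without downloading it first. A minimal sketch as a hypothetical shell function that counts words, assuming `hdfs` is on PATH:

```shell
# count_words (hypothetical helper): print the number of
# whitespace-separated words in an HDFS file.
count_words() {
  # tr strips the padding some wc implementations put around the number
  hdfs dfs -cat "$1" | wc -w | tr -d ' '
}
```

If nothing else was appended to /lagou/bigdata/hadoop.txt, `count_words /lagou/bigdata/hadoop.txt` would be expected to print `4` for the text `namenode datanode block replication`.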

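-chgrp, -chmod, -chown: used the same way as in the Linux file system; they change a file's group, permissions, and owner respectively

[root@linux121 hadoop-2.9.2]$ hdfs dfs -chmod 777 /lagou/bigdata/hadoop.txt

This can also be done by clicking in the HDFS web UI's file browser page.

[root@linux121 hadoop-2.9.2]$ hdfs dfs -chown root:root /lagou/bigdata/hadoop.txt

This can also be done by clicking in the HDFS web UI's file browser page.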

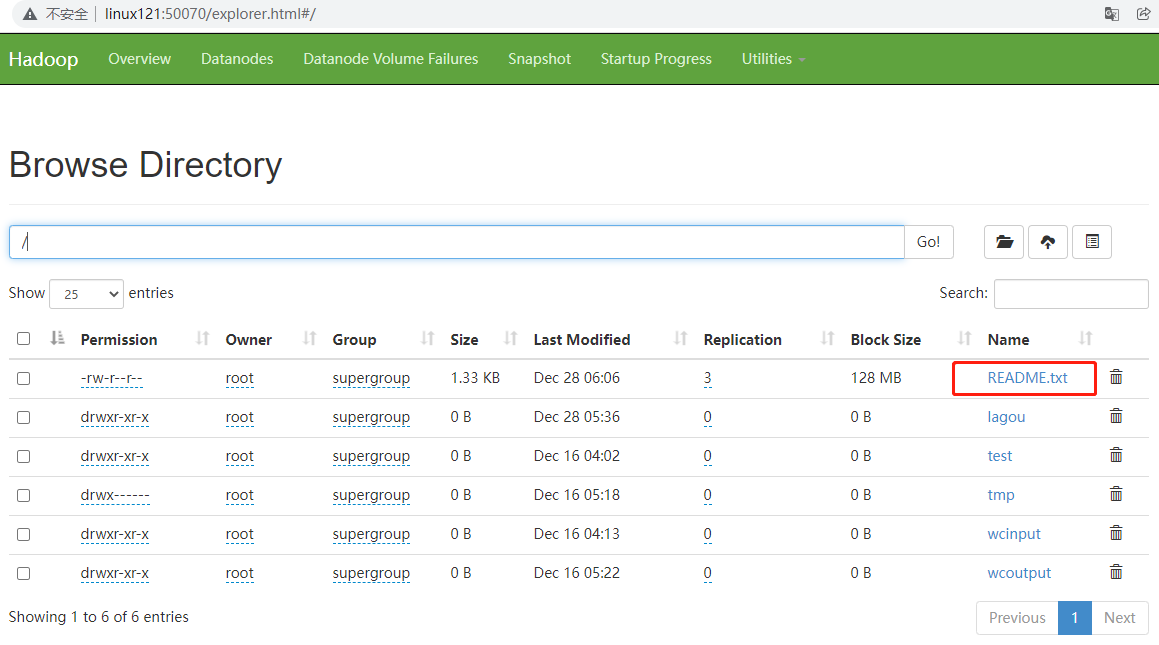

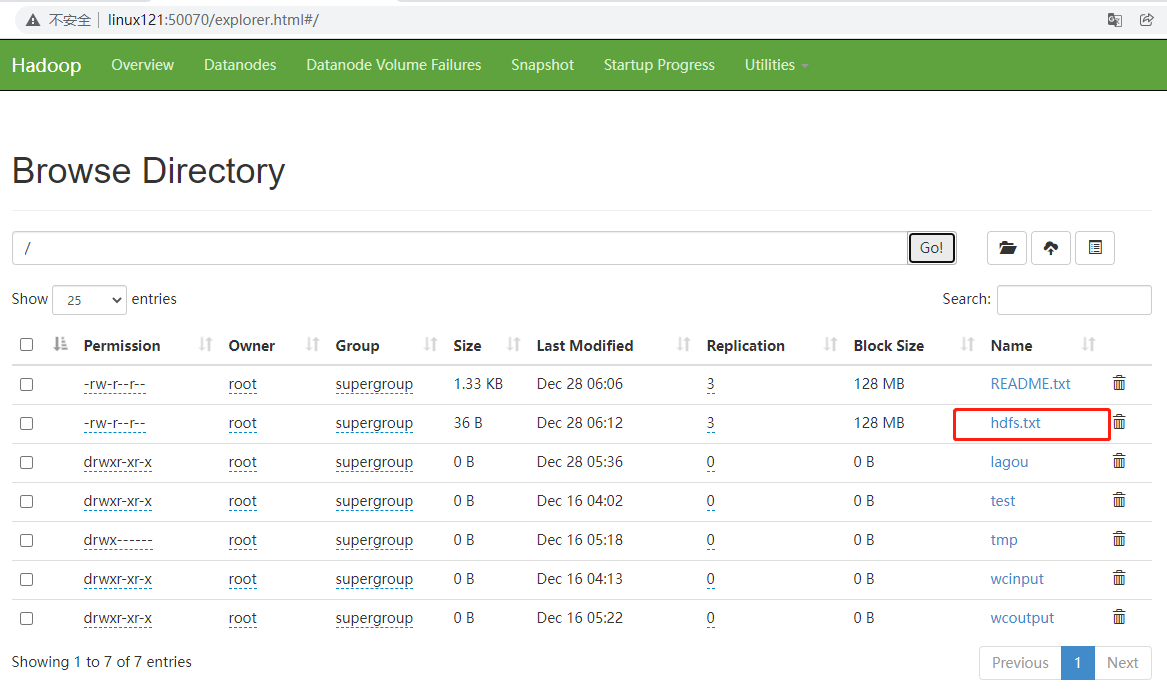
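As in Linux, these commands accept `-R` (see the usage list above) to apply a change to a whole directory tree. The hypothetical helper below is a minimal sketch that hands a directory to a new owner and then sets its mode; the default mode `755` is an illustrative choice, and `hdfs` is assumed to be on PATH. Note that changing the owner normally requires the HDFS superuser.

```shell
# share_dir (hypothetical helper): recursively set owner[:group] and mode.
# Arguments: <path> <owner[:group]> [mode, default 755]
share_dir() {
  local path="$1" owner="$2" mode="${3:-755}"
  # Change ownership first and stop if it fails
  # (for example, when not running as the HDFS superuser).
  hdfs dfs -chown -R "$owner" "$path" || return
  hdfs dfs -chmod -R "$mode" "$path"
}
```

Usage: `share_dir /lagou root:root` changes ownership of everything under /lagou and then sets mode 755 on it.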

-copyFromLocal: copy a file from the local file system to an HDFS path

[root@linux121 hadoop-2.9.2]$ hdfs dfs -copyFromLocal README.txt /

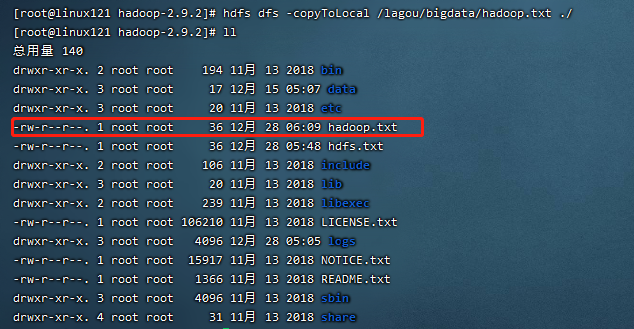

-copyToLocal: copy a file from HDFS to the local file system

[root@linux121 hadoop-2.9.2]$ hdfs dfs -copyToLocal /lagou/bigdata/hadoop.txt ./

-cp: copy a file from one HDFS path to another HDFS path

[root@linux121 hadoop-2.9.2]$ hdfs dfs -cp /lagou/bigdata/hadoop.txt /hdfs.txt

-mv: move a file within HDFS

[root@linux121 hadoop-2.9.2]$ hdfs dfs -mv /hdfs.txt /lagou/bigdata/

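-get: equivalent to -copyToLocal; downloads a file from HDFS to the local file system. If ./hadoop.txt already exists locally (for example, after the -copyToLocal step above), -get reports that the file exists; delete the local copy first or add -f to overwrite it.

[root@linux121 hadoop-2.9.2]$ hdfs dfs -get /lagou/bigdata/hadoop.txt ./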

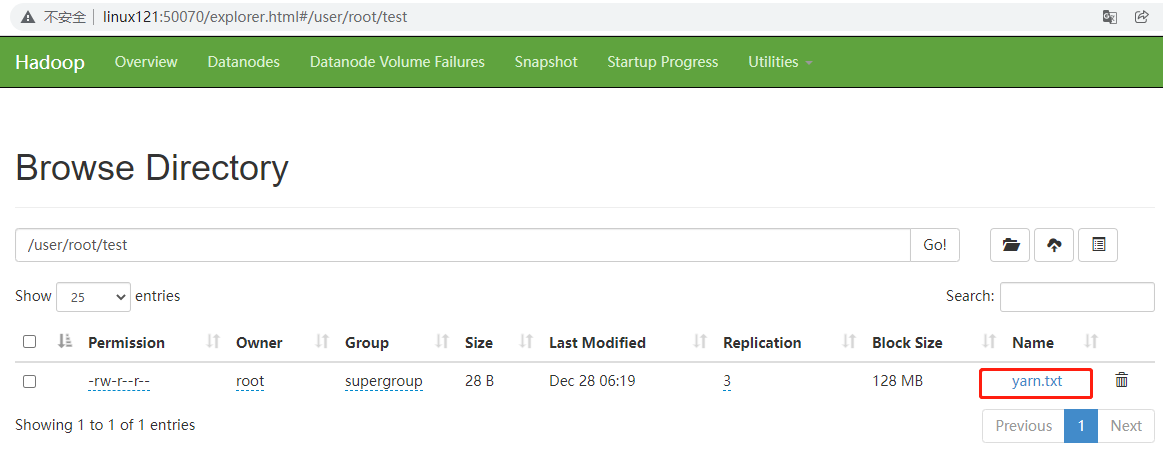
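When a directory holds many files (MapReduce output part files, for example), `-getmerge` from the usage list downloads them concatenated into one local file, and `-nl` adds a newline at the end of each file so their lines do not run together. A minimal sketch as a hypothetical shell function, assuming `hdfs` is on PATH:

```shell
# download_merged (hypothetical helper): concatenate every file in an
# HDFS directory into a single local file.
# Arguments: <hdfs_dir> <local_file>
download_merged() {
  local src="$1" dst="$2"
  # -nl appends a newline after each source file
  hdfs dfs -getmerge -nl "$src" "$dst"
}
```

Usage: `download_merged /lagou/bigdata ./merged.txt` would write hadoop.txt and hdfs.txt from that directory into one local file.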

-put: equivalent to -copyFromLocal; uploads a local file to HDFS

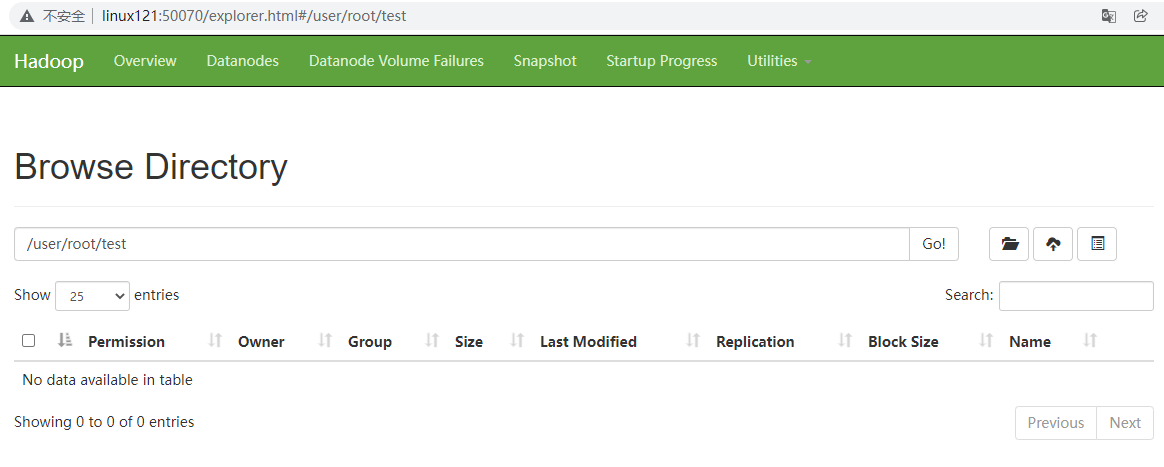

[root@linux121 hadoop-2.9.2]$ hdfs dfs -mkdir -p /user/root/test/

# Create yarn.txt on the local file system

[root@linux121 hadoop-2.9.2]$ vi yarn.txt

resourcemanager nodemanager

[root@linux121 hadoop-2.9.2]$ hdfs dfs -put ./yarn.txt /user/root/test/

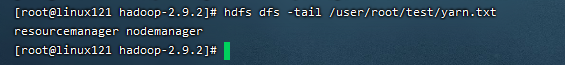
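By default `-put` (like `-copyFromLocal`) refuses to overwrite an existing destination file and reports that it already exists; the `-f` flag shown in the usage list forces the overwrite. The sketch below is a hypothetical upload helper that checks the local file first and only overwrites when asked; the `FORCE` environment variable is an illustrative convention, and `hdfs` is assumed to be on PATH.

```shell
# upload (hypothetical helper): copy a local file to HDFS.
# Set FORCE=1 to overwrite an existing destination with -f.
upload() {
  local src="$1" dst="$2"
  if [ ! -f "$src" ]; then
    echo "upload: local file not found: $src" >&2
    return 1
  fi
  if [ "${FORCE:-0}" = "1" ]; then
    hdfs dfs -put -f "$src" "$dst"
  else
    hdfs dfs -put "$src" "$dst"
  fi
}
```

Usage: `upload ./yarn.txt /user/root/test/` fails if the file is already there, while `FORCE=1 upload ./yarn.txt /user/root/test/` replaces it.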

-tail: display the end of a file (the last kilobyte)

[root@linux121 hadoop-2.9.2]$ hdfs dfs -tail /user/root/test/yarn.txt
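With `-f`, `-tail` keeps printing data as it is appended to the file, much like Unix `tail -f`. A minimal sketch as a hypothetical shell function that follows a file and optionally filters it with grep; the pattern argument is an illustrative addition, and `hdfs` is assumed to be on PATH.

```shell
# follow_file (hypothetical helper): stream data appended to an HDFS file,
# optionally keeping only lines that match a grep pattern.
# Arguments: <hdfs_file> [pattern]
# Runs until interrupted (Ctrl+C), because -tail -f does not exit on its own.
follow_file() {
  local file="$1" pattern="${2:-}"
  if [ -n "$pattern" ]; then
    hdfs dfs -tail -f "$file" | grep --line-buffered -- "$pattern"
  else
    hdfs dfs -tail -f "$file"
  fi
}
```

Usage: `follow_file /user/root/test/yarn.txt nodemanager` prints matching lines as new data is appended to the file.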


-rm: delete a file or directory (deleting a directory requires -r)

[root@linux121 hadoop-2.9.2]$ hdfs dfs -rm /user/root/test/yarn.txt
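`-rm` deletes files; directories need `-r`. If the HDFS trash is enabled (`fs.trash.interval` greater than 0; it is 0, meaning disabled, by default), deleted paths are moved to the user's `.Trash` directory instead of being removed immediately, and `-skipTrash` bypasses that. A cautious delete can first check existence with `-test -e`, so a missing path is not treated as an error. This is a sketch with a hypothetical function name, assuming `hdfs` is on PATH:

```shell
# remove_if_exists (hypothetical helper): recursively delete an HDFS path
# if it exists; returns 0 when the path is absent, so it is safe to re-run.
remove_if_exists() {
  local path="$1"
  # -test -e exits with status 0 when the path exists
  if hdfs dfs -test -e "$path"; then
    # -r is required for directories; with trash enabled the path
    # is moved to .Trash instead of being deleted immediately
    hdfs dfs -rm -r "$path"
  fi
}
```

Usage: `remove_if_exists /user/root/test` deletes the test directory and its contents, and does nothing if it is already gone.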


-rmdir: delete an empty directory

[root@linux121 hadoop-2.9.2]$ hdfs dfs -mkdir /test

[root@linux121 hadoop-2.9.2]$ hdfs dfs -rmdir /test

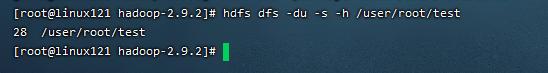

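-du: show size information for a directory

# Total size of the directory and everything under it (-s prints a summary, -h uses human-readable units)

[root@linux121 hadoop-2.9.2]$ hdfs dfs -du -s -h /user/root/test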

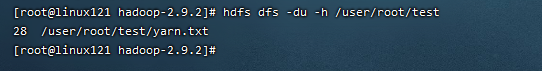

# Size of each entry inside the directory

[root@linux121 hadoop-2.9.2]$ hdfs dfs -du -h /user/root/test

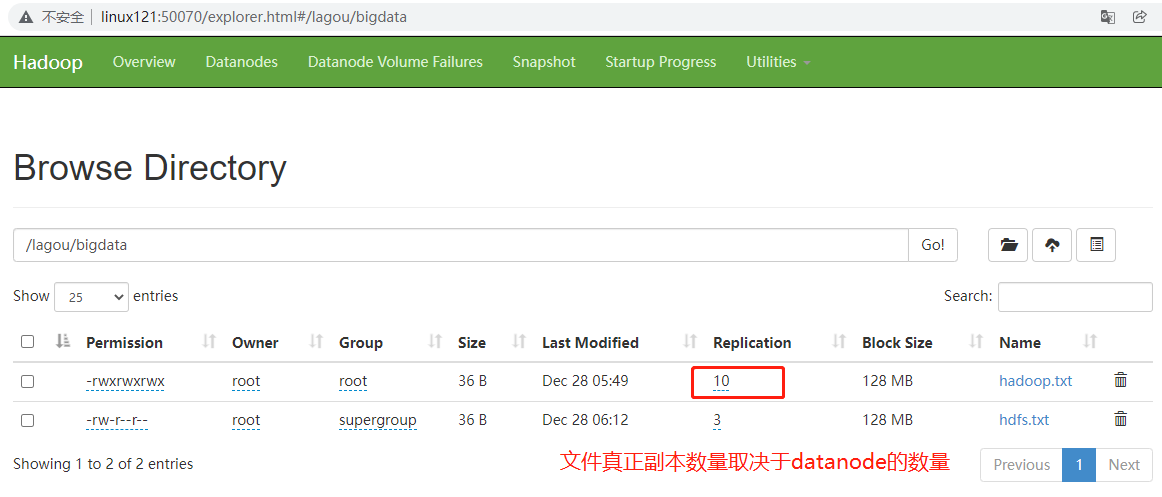
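For scripting, the human-readable `-h` output is awkward to compare. Without `-h`, the first column of `-du -s` is the size in bytes (in Hadoop 2.x it is typically followed by the space consumed across all replicas and then the path). A minimal sketch with hypothetical function names, assuming `hdfs` is on PATH, that extracts the byte count and checks it against a limit:

```shell
# dir_bytes (hypothetical helper): print the total size in bytes of an HDFS path.
# The first column of `-du -s` (without -h) is the size in bytes.
dir_bytes() {
  hdfs dfs -du -s "$1" | awk '{ print $1 }'
}

# over_limit (hypothetical helper): succeed when <path> is larger than <limit_bytes>.
over_limit() {
  local size
  size="$(dir_bytes "$1")"
  # [ ... -gt ... ] fails with status 2 if size is empty (e.g. path not found)
  [ "$size" -gt "$2" ]
}
```

Usage: `over_limit /user/root/test 1048576 && echo "larger than 1 MiB"`.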

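-setrep: set the replication factor of a file in HDFS

[root@linux121 hadoop-2.9.2]$ hdfs dfs -setrep 10 /lagou/bigdata/hadoop.txt

Note: the replication factor set here is only recorded in the NameNode's metadata; whether that many replicas actually exist depends on the number of DataNodes. Since this cluster currently has only 3 machines, there can be at most 3 replicas; the replica count can reach 10 only when the number of nodes grows to 10.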
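The note boils down to simple arithmetic: the number of replicas that can actually exist is min(requested factor, number of DataNodes), because HDFS does not place two replicas of the same block on one DataNode, while `-stat %r` reports the factor recorded in the NameNode metadata. The sketch below assumes the caller supplies the DataNode count (for example, the live DataNode count shown by `hdfs dfsadmin -report`); both function names are hypothetical, and `hdfs` is assumed to be on PATH. Relatedly, `-setrep -w` waits for replication to complete, so with a target of 10 on this three-node cluster it would likely never return.

```shell
# effective_replicas (hypothetical helper): replicas that can actually be placed.
# Bounded by the DataNode count, since each DataNode holds at most one
# replica of a given block.
effective_replicas() {
  local requested="$1" datanodes="$2"
  if [ "$requested" -lt "$datanodes" ]; then
    echo "$requested"
  else
    echo "$datanodes"
  fi
}

# recorded_replication (hypothetical helper): the replication factor
# stored in the NameNode metadata for a file.
recorded_replication() {
  hdfs dfs -stat %r "$1"
}
```

On the three-node cluster above, `effective_replicas 10 3` prints `3`, while `recorded_replication /lagou/bigdata/hadoop.txt` would be expected to print `10` after the `-setrep` command.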

Unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 4.0. Please credit WeiJia_Rao when reposting.