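Installing and Deploying KubeSphere on a k8s Cluster on Linux

Install and deploy the k8s cluster

You can install either a multi-node k8s cluster (one master, multiple workers) or a highly available k8s cluster (multiple masters, multiple workers). For the highly available setup, see the blog post on installing and deploying a highly available k8s cluster on Linux.

Install Helm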

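- Download Helm. (on the master node)

mkdir -p /etc/tiller

cd /etc/tiller

# Release page: https://github.com/helm/helm/releases/tag/v2.16.2 (wget needs the archive itself, not the release page)

wget https://get.helm.sh/helm-v2.16.2-linux-amd64.tar.gz

If the download is slow, download the archive elsewhere first (for example from a Helm mirror site in China) and then upload it to the server.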
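The download can be parameterized so that the archive name always matches the one unpacked in the next step. The following is a minimal sketch, assuming release archives are served from get.helm.sh under the name helm-<version>-<os>-<arch>.tar.gz; the function name helm_tarball_url is hypothetical.

```shell
# Hypothetical helper: build the archive URL for a given Helm release.
# Assumption: archives are published as helm-<version>-<os>-<arch>.tar.gz on get.helm.sh.
helm_tarball_url() {
  version="$1"
  os="${2:-linux}"      # defaults to the platform used in this walkthrough
  arch="${3:-amd64}"
  echo "https://get.helm.sh/helm-${version}-${os}-${arch}.tar.gz"
}
```

For the version used here, `wget "$(helm_tarball_url v2.16.2)"` would fetch helm-v2.16.2-linux-amd64.tar.gz.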

- Install. (on the master node)

tar -zxvf helm-v2.16.2-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm

# Verify

helm version

# Create the RBAC manifest for Tiller

cat > helm-rbac.yaml << EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
EOF

kubectl apply -f helm-rbac.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

Install Tiller

# Run on the master node
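A minimal sketch of this step, assuming Helm 2 and the tiller ServiceAccount created by helm-rbac.yaml. The helper name install_tiller and the HELM and KUBECTL override variables are hypothetical; this is one common way to deploy Tiller, not necessarily the exact command used in this setup.

```shell
# Commands can be overridden (for example HELM="echo helm") for a dry run.
HELM="${HELM:-helm}"
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: deploy Tiller under the tiller ServiceAccount,
# then wait for its Deployment to finish rolling out.
install_tiller() {
  $HELM init --service-account tiller --wait &&
    $KUBECTL -n kube-system rollout status deployment/tiller-deploy
}
```

On Helm 2 releases older than v2.17.0, `helm init` may fail to reach the default stable chart repository; passing `--stable-repo-url https://charts.helm.sh/stable` is a common workaround.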

Install OpenEBS

- Remove the taint from the master nodes. (on master01)

kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master01 Ready master 8h v1.17.0 192.168.18.111 <none> CentOS Linux 8 4.18.0-348.el8.x86_64 docker://19.3.15

master02 Ready master 5h18m v1.17.0 192.168.18.112 <none> CentOS Linux 8 4.18.0-348.el8.x86_64 docker://19.3.15

node01 Ready <none> 4h19m v1.17.0 192.168.18.113 <none> CentOS Linux 8 4.18.0-348.el8.x86_64 docker://19.3.15

node02 Ready <none> 4h17m v1.17.0 192.168.18.114 <none> CentOS Linux 8 4.18.0-348.el8.x86_64 docker://19.3.15

kubectl describe node master01 | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

kubectl describe node master02 | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

kubectl taint nodes master01 node-role.kubernetes.io/master:NoSchedule-

node/master01 untainted

kubectl taint nodes master02 node-role.kubernetes.io/master:NoSchedule-

node/master02 untainted
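With several master nodes, the same taint removal can be written as a loop. A minimal sketch, assuming the node names shown above; the function name untaint_masters and the KUBECTL override variable are hypothetical.

```shell
# KUBECTL can be overridden (for example KUBECTL="echo kubectl") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: remove the master NoSchedule taint from every node given.
untaint_masters() {
  for node in "$@"; do
    # The trailing "-" removes the taint instead of adding it.
    # kubectl reports an error for a node that does not carry the taint; the loop continues.
    $KUBECTL taint nodes "$node" node-role.kubernetes.io/master:NoSchedule-
  done
}
```

For this cluster the call would be `untaint_masters master01 master02`.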

- Install OpenEBS. (on master01)

# Create the OpenEBS namespace; all OpenEBS resources are created in this namespace

kubectl create ns openebs

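# Install OpenEBS with either Helm or kubectl; only one of the two methods is needed

# If Helm is already installed in the cluster, OpenEBS can be installed with Helm

helm init

helm install --namespace openebs --name openebs stable/openebs --version 1.5.0

# Alternatively, install it with kubectl

kubectl apply -f https://openebs.github.io/charts/openebs-operator-1.5.0.yaml

# The versioned manifest above could not be found, so the unversioned URL was used instead (the method used here)

kubectl apply -f https://openebs.github.io/charts/openebs-operator.yaml

# After OpenEBS is installed, StorageClasses are created automatically; list them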

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

openebs-device openebs.io/local Delete WaitForFirstConsumer false 17s

openebs-hostpath openebs.io/local Delete WaitForFirstConsumer false 17s

# Set openebs-hostpath as the default StorageClass

kubectl patch storageclass openebs-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

# OpenEBS LocalPV is now the default storage class. Check the status of the OpenEBS Pods; if all of them are Running, the storage installation succeeded.

kubectl get pod -n openebs

NAME READY STATUS RESTARTS AGE

openebs-localpv-provisioner-65967b8999-mb4th 1/1 Running 0 3m13s

openebs-ndm-2ksfm 1/1 Running 0 3m14s

openebs-ndm-2rqxl 1/1 Running 0 3m14s

openebs-ndm-cluster-exporter-7b76d67f6f-qsw7j 1/1 Running 0 3m13s

openebs-ndm-node-exporter-7lw4t 1/1 Running 0 3m13s

openebs-ndm-node-exporter-7qssk 1/1 Running 0 3m13s

openebs-ndm-node-exporter-h7f47 1/1 Running 0 3m13s

openebs-ndm-node-exporter-sgklc 1/1 Running 0 3m13s

openebs-ndm-operator-5f64b4967d-6qx5v 1/1 Running 0 3m14s

openebs-ndm-pkqnv 1/1 Running 0 3m14s

openebs-ndm-stbmr 1/1 Running 0 3m14s
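The two checks above (default StorageClass and Pod status) can be scripted instead of read by eye. A minimal sketch that only parses the text output of kubectl; the function names are hypothetical, and it assumes the default StorageClass is shown with a "(default)" marker after its name.

```shell
# Reads `kubectl get sc` output on stdin and prints the StorageClass marked "(default)".
default_storage_class() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Reads `kubectl get pod -n <namespace>` output on stdin and prints the names
# of Pods whose STATUS column is anything other than Running.
not_running_pods() {
  awk 'NR > 1 && NF >= 3 && $3 != "Running" { print $1 }'
}
```

Typical use would be `kubectl get sc | default_storage_class` and `kubectl get pod -n openebs | not_running_pods`; empty output from the second command means every Pod is Running. Pods that finished normally (STATUS Completed) are also listed, so the result still needs a quick review.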

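Install KubeSphere

- Minimal installation of KubeSphere. (on master01)

If the cluster has more than 1 CPU core and more than 2 GB of memory available, KubeSphere can be installed minimally with the following commands. (This is the method used here.)

wget https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/kubesphere-installer.yaml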

wget https://github.com/kubesphere/ks-installer/releases/download/v3.0.0/cluster-configuration.yaml

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

- Check the installation log. (on master01)

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

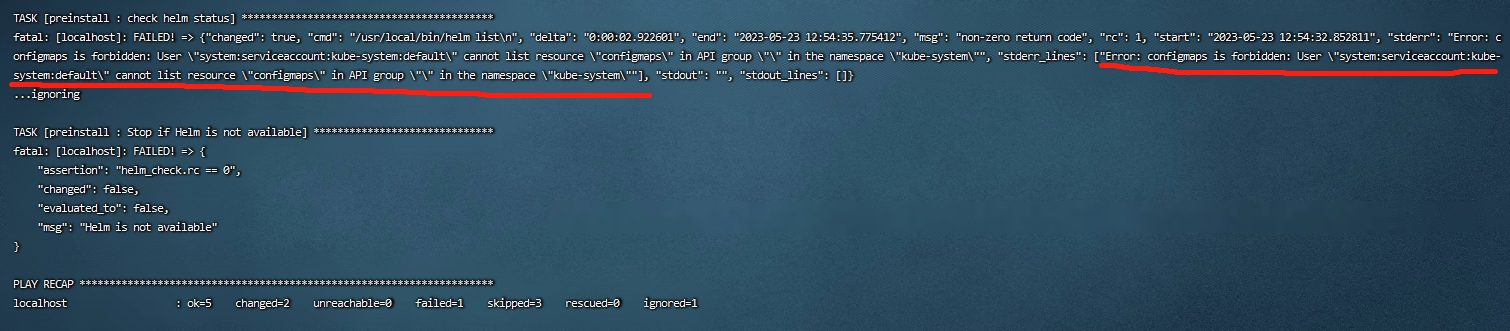

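The error means that Pods running under the default ServiceAccount of the kube-system namespace are not authorized to access the K8s API group.

# Create the tiller ServiceAccount in the kube-system namespace and let Tiller use it to access the K8s API group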

kubectl create serviceaccount --namespace kube-system tiller

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

- Check that all Pods in the KubeSphere-related namespaces are running normally. (on master01)

kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-2xlwb 1/1 Running 0 6h21m

kube-flannel kube-flannel-ds-49kjf 1/1 Running 0 4h52m

kube-flannel kube-flannel-ds-g7668 1/1 Running 0 4h51m

kube-flannel kube-flannel-ds-th9lp 1/1 Running 0 5h51m

kube-system coredns-9d85f5447-6chrb 1/1 Running 0 9h

kube-system coredns-9d85f5447-t76bk 1/1 Running 0 9h

kube-system etcd-master01 1/1 Running 0 9h

kube-system etcd-master02 1/1 Running 0 5h51m

kube-system kube-apiserver-master01 1/1 Running 0 9h

kube-system kube-apiserver-master02 1/1 Running 0 5h51m

kube-system kube-controller-manager-master01 1/1 Running 1 9h

kube-system kube-controller-manager-master02 1/1 Running 0 5h51m

kube-system kube-proxy-k45db 1/1 Running 0 9h

kube-system kube-proxy-lh5bq 1/1 Running 0 5h51m

kube-system kube-proxy-ljz5l 1/1 Running 0 4h52m

kube-system kube-proxy-wsxzr 1/1 Running 0 4h51m

kube-system kube-scheduler-master01 1/1 Running 1 9h

kube-system kube-scheduler-master02 1/1 Running 0 5h51m

kube-system tiller-deploy-7cc57f94dc-gvnln 1/1 Running 0 40m

kubesphere-system ks-installer-75b8d89dff-cf4d5 1/1 Running 0 88s

openebs openebs-localpv-provisioner-65967b8999-mb4th 1/1 Running 0 15m

openebs openebs-ndm-2ksfm 1/1 Running 0 15m

openebs openebs-ndm-2rqxl 1/1 Running 0 15m

openebs openebs-ndm-cluster-exporter-7b76d67f6f-qsw7j 1/1 Running 0 15m

openebs openebs-ndm-node-exporter-7lw4t 1/1 Running 0 15m

openebs openebs-ndm-node-exporter-7qssk 1/1 Running 0 15m

openebs openebs-ndm-node-exporter-h7f47 1/1 Running 0 15m

openebs openebs-ndm-node-exporter-sgklc 1/1 Running 0 15m

openebs openebs-ndm-operator-5f64b4967d-6qx5v 1/1 Running 0 15m

openebs openebs-ndm-pkqnv 1/1 Running 0 15m

openebs openebs-ndm-stbmr 1/1 Running 0 15m

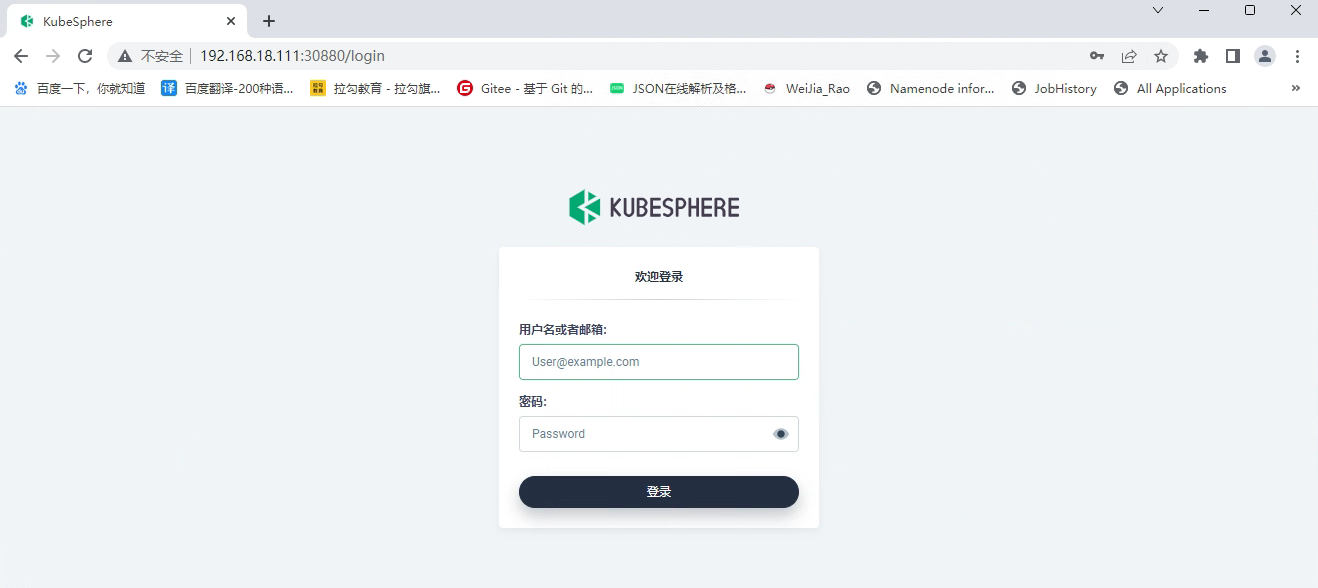

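Access the web console

Access the web console through the NodePort (IP:30880), using the IP of any node (including the virtual IP), with the default account and password (admin/P@88w0rd).

- Check that all Pods in the KubeSphere-related namespaces are running normally

kubectl get pod --all-namespaces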

kubectl get svc/ks-console -n kubesphere-system
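The console address is simply a node IP combined with the NodePort of the ks-console service. A minimal sketch; the function name console_url is hypothetical, and 30880 is used as the default because it is the port given above.

```shell
# Hypothetical helper: build the console URL from a node IP and the NodePort.
console_url() {
  node_ip="$1"
  node_port="${2:-30880}"   # NodePort of ks-console as given above
  echo "http://${node_ip}:${node_port}"
}
```

For example, `console_url 192.168.18.111` prints the address for master01. If the port was changed, it can be read with `kubectl get svc/ks-console -n kubesphere-system -o jsonpath='{.spec.ports[0].nodePort}'` and passed as the second argument.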

Enable pluggable components

Before installing KubeSphere

- Edit the cluster-configuration.yaml file

vi cluster-configuration.yaml

-

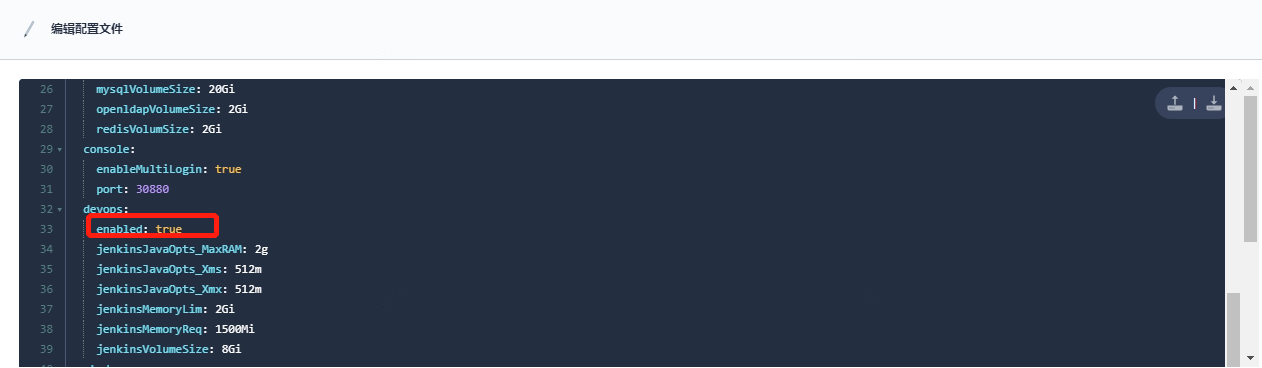

在 cluster-configuration.yaml 文件中,找到启用的组件,如 devops ,openpitrix等,查看详情。

1

enabled: true # Change "false" to "true"

- Reinstall

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml
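Editing the file by hand works, but the change can also be scripted. A minimal sketch, assuming each component appears as a "<component>:" line followed by its own "enabled: false" line, as in the v3.0.0 cluster-configuration.yaml; the function name enable_component is hypothetical.

```shell
# Hypothetical helper: print FILE with "enabled: false" switched to "enabled: true"
# for the first "enabled:" key that follows the "<component>:" line.
enable_component() {
  file="$1"
  component="$2"
  awk -v comp="$component" '
    $1 == (comp ":")            { in_comp = 1; print; next }
    in_comp && $1 == "enabled:" { sub(/false/, "true"); in_comp = 0 }
                                { print }
  ' "$file"
}
```

The result goes to standard output, so it can be reviewed first, for example with `enable_component cluster-configuration.yaml devops > cluster-configuration.new.yaml` followed by a diff against the original file.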

After installing KubeSphere

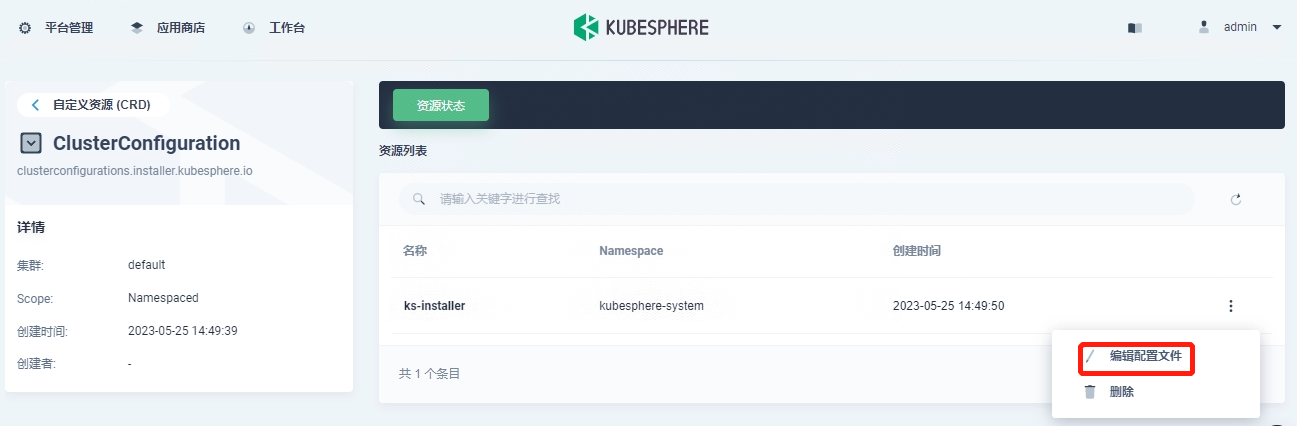

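Log in to the console as admin. Click Platform in the upper-left corner, then select Cluster Management.

Click CRDs (custom resources), enter clusterconfiguration in the search bar, and click the search result to open its detail page.

In the resource list, click the three dots to the right of ks-installer, then select Edit YAML.

In the configuration file, change enabled from false to true for each component you want to install. When finished, click Update to save the configuration.

You can find the Web kubectl tool by clicking the hammer icon in the lower-right corner of the web console.

# View the logs

Log in to the KubeSphere console; the status of each component can be checked under Service Components.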
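The same change can also be made from a terminal such as Web kubectl rather than through the console editor. A minimal sketch, assuming the ks-installer resource in the kubesphere-system namespace is reachable under the short name cc and that components sit directly under spec; the function name component_patch is hypothetical.

```shell
# Hypothetical helper: build a JSON merge patch that sets spec.<component>.enabled to true.
component_patch() {
  printf '{"spec":{"%s":{"enabled":true}}}\n' "$1"
}
```

It could be applied with `kubectl -n kubesphere-system patch cc ks-installer --type merge -p "$(component_patch devops)"`, after which the installer log shows the progress of the newly enabled component.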