Core Transaction Analysis: Data Import

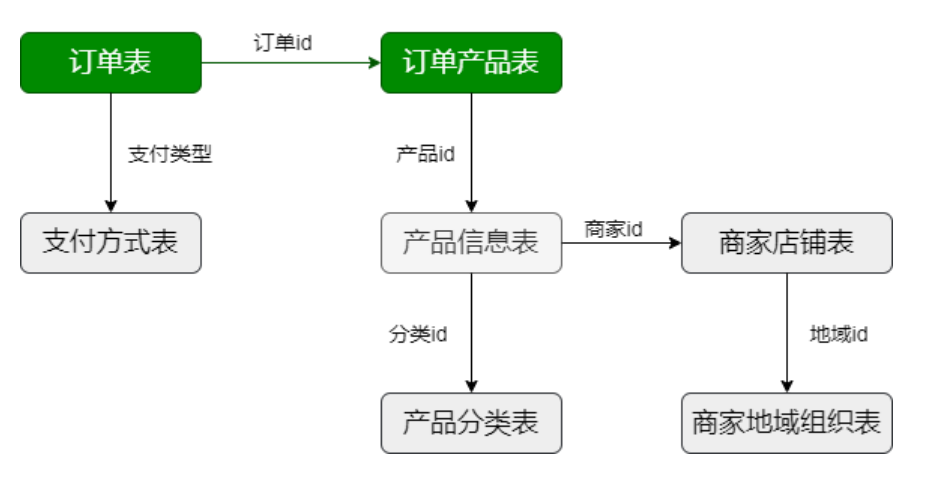

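Already decided: use DataX to export the data of 7 tables.

MySQL export modes: full export, and incremental export (exporting only the previous day's data).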

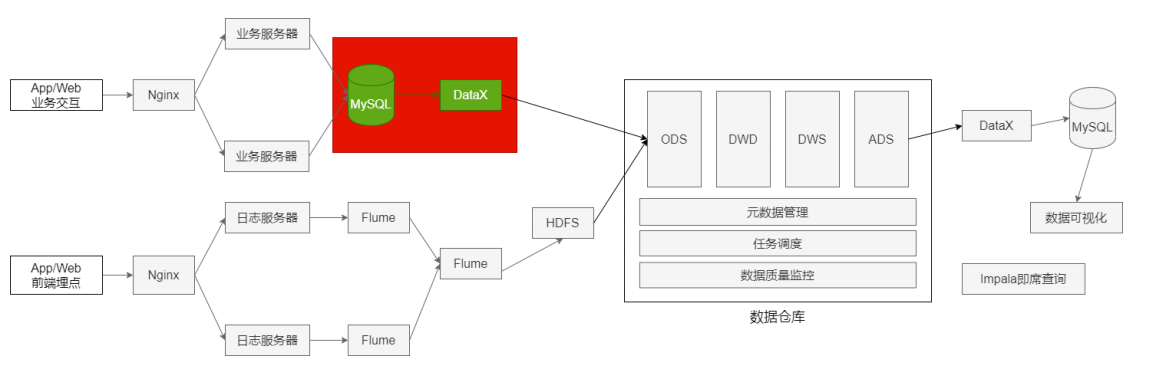

The business data is stored in MySQL, and every day in the early morning the previous day's table data is imported.

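- Tables with small data volumes are exported from MySQL in full.
- Tables with large data volumes, in which a field can distinguish each day's new data, are exported from MySQL incrementally.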
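The commands below set do_date by hand (for example do_date='2020-07-01'); a scheduled early-morning run would instead compute the previous day's date, while still allowing a specific day to be rerun. A minimal sketch, assuming GNU `date` (BSD/macOS `date` has no `-d` option); `resolve_do_date` is a hypothetical helper name, not part of DataX:

```shell
#!/usr/bin/env bash
# Hypothetical helper: resolve the business date (yyyy-mm-dd) to import.
# - With an argument, GNU date validates and normalizes it.
# - Without an argument, the previous day's date is returned.
resolve_do_date() {
  if [ -n "$1" ]; then
    date -d "$1" +%F          # non-zero exit status on an invalid date
  else
    date -d "yesterday" +%F   # default: the previous day
  fi
}
```

For example, `resolve_do_date 2020-07-01` prints 2020-07-01, an invalid date such as 2020-13-45 makes it fail, and with no argument it prints yesterday's date.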

3 incremental tables:

- Orders table lagou_trade_orders
- Order product table lagou_order_produce
- Product information table lagou_product_info

4 full tables:

- Product category table lagou_product_category
- Merchant shop table lagou_shops
- Merchant regional organization table lagou_shop_admin_org
- Payment method table lagou_payment
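The split above can be written down once and reused by a scheduling script. A sketch with a hypothetical function `export_mode` that maps each of the seven table names listed above to its export mode:

```shell
#!/usr/bin/env bash
# Hypothetical lookup: export mode for each of the 7 MySQL tables.
# Prints "incremental" or "full"; returns 1 for an unknown table.
export_mode() {
  case "$1" in
    lagou_trade_orders|lagou_order_produce|lagou_product_info)
      echo "incremental" ;;   # large tables, daily new rows identifiable by a field
    lagou_product_category|lagou_shops|lagou_shop_admin_org|lagou_payment)
      echo "full" ;;          # small tables, exported in full every day
    *)
      echo "unknown table: $1" >&2
      return 1 ;;
  esac
}
```

For example, `export_mode lagou_shops` prints `full`.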

Full data import

MySQL => HDFS => Hive

Load the full data every day, forming a new partition each day. (This guides how the ODS tables should be created.)

MySQLReader ===> HdfsWriter

Product category table

Create /data/script/datax_json/product_category.json

```shell
cd /data/script/datax_json
```

Contents of product_category.json:

```json
{
  ...
}
```

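Notes:

- Tables with small data volumes do not need multiple channels; using multiple channels produces multiple small files.
- Before running the command, create the corresponding directory on HDFS: /user/hive/warehouse/trade.db/lagou_product_category/dt=yyyy-mm-dd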

```shell
[root@Linux123 ~]# do_date='2020-07-01'
```
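As a rough sketch (not the original commands), the daily full load of one table comes down to two steps: create the partition directory required by the note above, then run the DataX job with the business date passed in as a job parameter. DataX's `datax.py` accepts job parameters through `-p "-Dname=value"`, and the job JSON refers to them as `${name}`. The sketch prints the commands instead of running them; `full_load_cmds` is a hypothetical name, and it assumes `DATAX_HOME` points at the DataX installation and that the job JSON uses `${do_date}` in its HDFS path:

```shell
#!/usr/bin/env bash
# Dry-run sketch of the daily full load for one table: commands are printed, not executed.
# Assumptions: DATAX_HOME is set at run time, the job JSON uses ${do_date} for the partition
# path, and the HDFS layout is /user/hive/warehouse/trade.db/<table>/dt=<date>.
full_load_cmds() {
  local table="$1" job_json="$2" do_date="$3"
  local dir="/user/hive/warehouse/trade.db/${table}/dt=${do_date}"
  # 1. The target partition directory must exist before the DataX job runs.
  echo "hdfs dfs -mkdir -p ${dir}"
  # 2. Run the job, handing the business date to DataX as a job parameter.
  echo "python \${DATAX_HOME}/bin/datax.py -p \"-Ddo_date=${do_date}\" ${job_json}"
}

full_load_cmds lagou_product_category /data/script/datax_json/product_category.json 2020-07-01
```

Printing first makes it easy to check the paths before anything touches HDFS; piping the output to `bash` then runs the two commands.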

Merchant shop table

Create /data/script/datax_json/shops.json

```shell
cd /data/script/datax_json
```

Contents of shops.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-01'
```

Merchant regional organization table

Create /data/script/datax_json/shop_org.json

```shell
cd /data/script/datax_json
```

Contents of shop_org.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-01'
```

Payment method table

Create /data/script/datax_json/payments.json

```shell
cd /data/script/datax_json
```

Contents of payments.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-01'
```
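The four full-load tables differ only in table name and job file, so their daily commands can also be generated in one loop. A dry-run sketch with a hypothetical function `full_load_all`; it assumes all four tables follow the /user/hive/warehouse/trade.db/<table>/dt=<date> layout that the notes give for lagou_product_category, and that `DATAX_HOME` is set when the printed commands are run:

```shell
#!/usr/bin/env bash
# Dry-run sketch: print the daily full-load commands for the four full-export tables.
# Assumption: every table uses the /user/hive/warehouse/trade.db/<table>/dt=<date> layout.
full_load_all() {
  local do_date="$1" json_dir=/data/script/datax_json
  local pair table job_file
  # "table:job file" pairs in a fixed order
  for pair in "lagou_product_category:product_category.json" \
              "lagou_shops:shops.json" \
              "lagou_shop_admin_org:shop_org.json" \
              "lagou_payment:payments.json"; do
    table="${pair%%:*}"      # text before the colon
    job_file="${pair#*:}"    # text after the colon
    echo "hdfs dfs -mkdir -p /user/hive/warehouse/trade.db/${table}/dt=${do_date}"
    echo "python \${DATAX_HOME}/bin/datax.py -p \"-Ddo_date=${do_date}\" ${json_dir}/${job_file}"
  done
}

full_load_all 2020-07-01
```

The pairs are kept in a plain list rather than a bash associative array so that the output order is predictable.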

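Incremental data import

Initial data load (run once): the full load above can serve as the initial load.

Daily incremental load: each day's data forms its own partition.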

Trade orders table

Create /data/script/datax_json/orders.json

Note: for the filter condition, use the time field modifiedTime.
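One way to turn the modifiedTime condition into a filter for a single business day is a half-open range from that day's 00:00:00 up to, but not including, the next day's 00:00:00; DataX's mysqlreader accepts such a condition through its `where` parameter. Filtering on a modification time presumably also picks up rows updated that day, not only new ones, and comparing the raw column against a range (rather than applying a function to it) keeps an index on modifiedTime usable. A sketch, assuming modifiedTime is a DATETIME column and GNU `date` is available; `incr_where` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: WHERE condition selecting one business day's rows by modifiedTime.
# The half-open range means a row stamped exactly at midnight belongs to exactly one day.
# Requires GNU date for "-d".
incr_where() {
  local do_date="$1" next_date
  next_date=$(date -d "${do_date} +1 day" +%F) || return 1
  printf "modifiedTime >= '%s 00:00:00' AND modifiedTime < '%s 00:00:00'\n" \
    "$do_date" "$next_date"
}

incr_where 2020-07-12
# modifiedTime >= '2020-07-12 00:00:00' AND modifiedTime < '2020-07-13 00:00:00'
```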

```shell
cd /data/script/datax_json
```

Contents of orders.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-12'
```

Order product table

Create /data/script/datax_json/order_product.json

```shell
cd /data/script/datax_json
```

Contents of order_product.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-12'
```

Product information table

Create /data/script/datax_json/product_info.json

```shell
cd /data/script/datax_json
```

Contents of product_info.json:

```json
{
  ...
}
```

```shell
[root@Linux123 ~]# do_date='2020-07-12'
```
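For the three incremental tables, the daily run differs from the full load mainly in the date window each job reads. A dry-run sketch with a hypothetical function `incr_load_all` that passes both the business day and the following day to DataX (multiple job parameters go into one `-p` string), so that each job could select rows with do_date <= modifiedTime < next_date. The parameter name next_date, the use of `${do_date}` and `${next_date}` in the job files' modifiedTime filter, and the HDFS layout are all assumptions; GNU `date` is required:

```shell
#!/usr/bin/env bash
# Dry-run sketch: print the daily incremental-load commands for the three incremental tables.
# Assumptions (hypothetical, not from the original job files):
#   - each job JSON filters on modifiedTime using the DataX variables ${do_date} and ${next_date};
#   - the HDFS layout is /user/hive/warehouse/trade.db/<table>/dt=<date>.
# Requires GNU date for "-d".
incr_load_all() {
  local do_date="$1" next_date json_dir=/data/script/datax_json
  local pair table job_file
  next_date=$(date -d "${do_date} +1 day" +%F) || return 1
  for pair in "lagou_trade_orders:orders.json" \
              "lagou_order_produce:order_product.json" \
              "lagou_product_info:product_info.json"; do
    table="${pair%%:*}"      # text before the colon
    job_file="${pair#*:}"    # text after the colon
    # Each day's increment goes into its own dt partition.
    echo "hdfs dfs -mkdir -p /user/hive/warehouse/trade.db/${table}/dt=${do_date}"
    echo "python \${DATAX_HOME}/bin/datax.py -p \"-Ddo_date=${do_date} -Dnext_date=${next_date}\" ${json_dir}/${job_file}"
  done
}

incr_load_all 2020-07-12
```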